What is Docker and why is it so popular in 2026?

Last updated: Feb 9, 2026

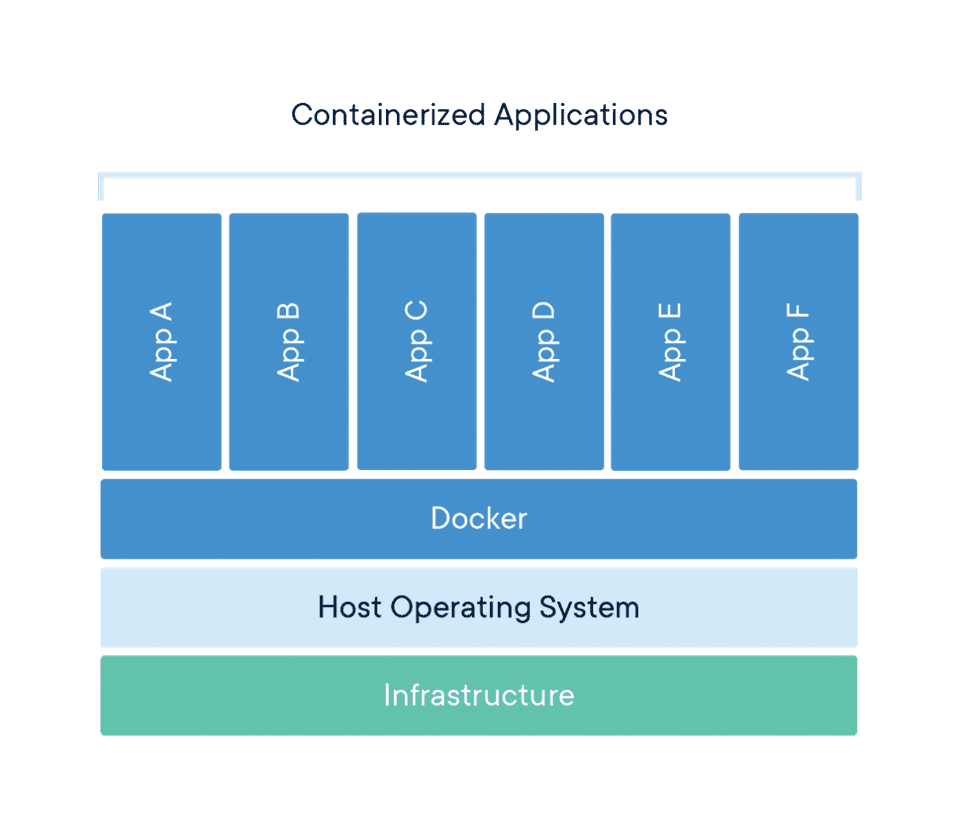

Docker is a tool designed to make it easier for developers to create, deploy, and run applications by using containers. A container lets a developer ship an application as a single package that includes all the libraries, system tools, code, and dependencies it needs. The developer can then be confident that the application will run the same way on any other machine running Docker.

You can think of containers as extremely lightweight, modular virtual machines. You can easily create, deploy, copy, delete, and move them from environment to environment. Containers isolate applications' execution environments from one another, but they all share the host's operating system kernel.

Docker relies on features of the Linux kernel to segregate processes so that they can run independently. Cgroups (control groups) govern how much of the system's resources, such as CPU and memory, a group of processes may use. Namespaces wrap a set of system resources, such as process IDs, network interfaces, and mount points, and present them to a process as if they were dedicated to it. This independence is the point of containers: the ability to run multiple processes and apps separately from one another, making better use of your infrastructure while retaining much of the isolation you would get from separate systems.
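On a Linux host you can observe both mechanisms from the process side. The kernel lists each process's namespaces under /proc/<pid>/ns and its cgroup membership in /proc/<pid>/cgroup. The minimal Python sketch below reads both for the current process. It assumes a Linux /proc filesystem and returns empty results anywhere else, and the helper names are hypothetical.

```python
from __future__ import annotations

import os


def list_namespaces(pid: str = "self") -> dict[str, str]:
    """Map each namespace type (e.g. "net", "pid") to its kernel identifier.

    Two processes are in the same namespace when the identifiers match. For
    the namespaces Docker creates, a containerized process gets its own
    identifiers, and with them its own view of process IDs, network
    interfaces, mount points, and so on.
    """
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):  # not Linux, or no such process
        return {}
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like "net:[4026531840]"
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:  # an entry may be unreadable without extra privileges
            continue
    return namespaces


def read_cgroups(pid: str = "self") -> list[str]:
    """Return the cgroup path(s) the process belongs to, one per hierarchy."""
    path = f"/proc/{pid}/cgroup"
    if not os.path.exists(path):
        return []
    paths = []
    with open(path) as f:
        for line in f:
            # Format: hierarchy-ID:controller-list:cgroup-path (e.g. "0::/user.slice")
            parts = line.rstrip("\n").split(":", 2)
            if len(parts) == 3:
                paths.append(parts[2])
    return paths


if __name__ == "__main__":
    for kind, ident in list_namespaces().items():
        print(f"{kind:<20} {ident}")
    print("cgroups:", read_cgroups())
```

If you compare the output on the host with the output inside a container, the identifiers for namespaces such as net and pid should differ. Both processes still run on the same kernel.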

Most business applications consist of several separate components: a web server, a database, an in-memory cache. Containers make it possible to compose these pieces into a single functional unit. Each piece can still be maintained, updated, swapped out, and modified independently of the others. This is essentially the microservices model of application design, which divides application functionality into separate, self-contained services. Lightweight, portable containers make it easier to build and maintain microservices-based applications.

Download

https://www.docker.com/products/docker-desktop

Test Docker

```console
$ docker --version
Docker version 19.03.13, build 4484c46d9d

$ docker run hello-world
Unable to find image 'hello-world:latest' locally
latest: Pulling from library/hello-world
ca4f61b1923c: Pull complete
Digest: sha256:ca0eeb6fb05351dfc8759c20733c91def84cb8007aa89a5bf606bc8b315b9fc7
Status: Downloaded newer image for hello-world:latest

Hello from Docker!
This message shows that your installation appears to be working correctly.
...

$ docker ps --all
CONTAINER ID   IMAGE         COMMAND    CREATED          STATUS
54f4984ed6a8   hello-world   "/hello"   20 seconds ago   Exited (0) 19 seconds ago
```
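You may want a script, rather than a person, to confirm that hello-world exited cleanly. One option is to ask docker ps for specific fields through its --format flag (the {{.ID}}, {{.Image}} and {{.Status}} template placeholders) and then parse the Status column. The sketch below assumes a Status text of the form shown above ("Exited (0) ..."). That text is meant for humans, so treat the regex as a convenience rather than a stable interface. The function names are hypothetical.

```python
from __future__ import annotations

import re
import subprocess

# Matches the human-readable status docker ps prints for stopped containers,
# e.g. "Exited (0) 19 seconds ago"; running containers show "Up ..." instead.
_EXITED = re.compile(r"^Exited \((-?\d+)\)")


def exit_code_from_status(status: str) -> int | None:
    """Return the exit code embedded in a docker ps Status string, if any."""
    match = _EXITED.match(status.strip())
    return int(match.group(1)) if match else None


def parse_ps_lines(output: str) -> list[dict]:
    """Parse the tab-separated ID/Image/Status lines produced by --format."""
    rows = []
    for line in output.splitlines():
        if not line.strip():
            continue
        container_id, image, status = line.split("\t", 2)
        rows.append({"id": container_id, "image": image, "status": status,
                     "exit_code": exit_code_from_status(status)})
    return rows


def list_all_containers() -> list[dict]:
    """Run docker ps --all (requires the Docker CLI) and parse its output."""
    result = subprocess.run(
        ["docker", "ps", "--all", "--format", "{{.ID}}\t{{.Image}}\t{{.Status}}"],
        capture_output=True, text=True, check=True)
    return parse_ps_lines(result.stdout)
```

For a single container, docker inspect --format '{{.State.ExitCode}}' <container> reads the exit code directly. Scripts that depend on it should prefer that field over parsing text.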

Important Terminology

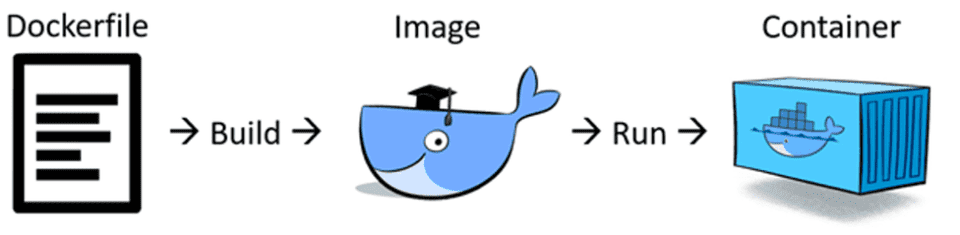

Dockerfile

- Most Docker images start from a Dockerfile. A Dockerfile is a text file, written in an easy-to-understand syntax, that contains the instructions for building a Docker image. It specifies the base image (which supplies the container's operating system files), languages, environment variables, file locations, network ports, other components the container needs, and what the container will actually do once we run it.

- Dockerfile Commands

- FROM — initializes a new build stage and sets the base image for subsequent instructions. A valid Dockerfile must therefore start with a FROM instruction. Only parser directives, comments, and ARG instructions may precede it.

- RUN — executes commands in a new layer on top of the current image and commits the result. The committed image is used for the next step in the Dockerfile.

- ENV — `ENV <key>=<value>` sets the environment variable `<key>` to the value `<value>`. This value will be in the environment for all subsequent instructions in the build stage, and it can also be substituted inline in many of them.

- EXPOSE — informs Docker that the container listens on the specified network ports at runtime. You can specify whether a port listens on TCP or UDP, and the default is TCP if no protocol is given. EXPOSE on its own does not publish the port. To let the host and the outside world reach the isolated container, publish the port when you start the container (for example with docker run -p).

- VOLUME — creates a mount point with the specified name and marks it as holding externally mounted volumes from the native host or other containers.
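Two of the rules above are easier to see in code: FROM must come first (Docker allows only ARG instructions, comments and parser directives before it), and EXPOSE defaults to TCP. The deliberately tiny Python checker below illustrates both. It is a sketch, not Docker's parser. It assumes one instruction per line, ignores line continuations, heredocs and variable substitution, and skips non-literal EXPOSE values. The function name is hypothetical.

```python
from __future__ import annotations


def check_dockerfile(text: str) -> tuple[list[str], list[tuple[int, str]]]:
    """Return (problems, exposed_ports) for a simple Dockerfile.

    Assumes one instruction per line: line continuations, heredocs and
    variable substitution are not handled.
    """
    problems: list[str] = []
    exposed: list[tuple[int, str]] = []
    seen_from = False
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):  # blanks, comments, parser directives
            continue
        parts = line.split(None, 1)
        keyword = parts[0].upper()  # instruction names are case-insensitive
        args = parts[1] if len(parts) > 1 else ""
        if not seen_from:
            if keyword == "FROM":
                seen_from = True
            elif keyword != "ARG":  # only ARG may precede the first FROM
                problems.append(f"line {lineno}: {keyword} before FROM")
        if keyword == "EXPOSE":
            for spec in args.split():
                port, _, proto = spec.partition("/")
                if port.isdigit():  # ranges and $VARIABLES are skipped here
                    exposed.append((int(port), (proto or "tcp").lower()))
    if not seen_from:
        problems.append("no FROM instruction")
    return problems, exposed
```

For example, `EXPOSE 80 53/udp` yields `[(80, "tcp"), (53, "udp")]`. The port with no protocol falls back to TCP.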

- Sample dockerfiles: https://github.com/sanmak/dockerfile-samples.

Node Dockerfile Example:

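```dockerfile
# Small Alpine-based Node.js image; Node 12 is end-of-life, so use a supported LTS tag
FROM node:22-alpine
ENV NODE_ENV=production
WORKDIR /usr/src/app
# Copy the dependency manifests first so the install layer stays cached until they change
# (the * makes the lock and shrinkwrap files optional)
COPY ["package.json", "package-lock.json*", "npm-shrinkwrap.json*", "./"]
# Install production dependencies only; node_modules is moved up one level,
# where Node.js still finds it by searching parent directories
RUN npm install --omit=dev --silent && mv node_modules ../
# Copy the rest of the application source
COPY . .
# Document the port the app listens on (publish it with docker run -p)
EXPOSE 80
CMD ["npm", "start"]
```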

Docker image

- A Docker image is a portable package containing the specifications for which software components the container will run and how. Once the Dockerfile is written, invoke docker build to create an image based on it. Once an image is created, it is static: containers started from it add their own writable layer on top rather than changing the image.

```console
$ docker images
```

Docker run

- docker run is the command that actually launches a container. Each container is a running instance of an image. Containers are designed to be transient and temporary, but they can be stopped and restarted. A restarted container keeps the changes made to its filesystem, although its processes start fresh. Multiple containers can also run from the same image simultaneously.

```console
$ docker run -p 80:80 -d node-test
```

Docker Hub

- Docker Hub is a SaaS registry for sharing and managing container images. It hosts official Docker images from open-source projects and software vendors, as well as unofficial images from the general public. You can download container images containing useful code, or upload your own and either share them openly or keep them private.
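Image names you type are shorthand, and the pull output earlier (library/hello-world) hints at the rule. A name with no registry part refers to Docker Hub (docker.io). A single-component name such as hello-world or node refers to an official image under library/. The Python sketch below applies a simplified version of that normalization. It skips some edge cases of Docker's real reference parser, such as validating allowed characters, and the function name is hypothetical.

```python
from __future__ import annotations

DEFAULT_REGISTRY = "docker.io"


def normalize_image_ref(ref: str) -> tuple[str, str, str | None, str | None]:
    """Split an image reference into (registry, repository, tag, digest)."""
    name, _, digest = ref.partition("@")  # "app@sha256:..." pins an exact digest
    # Only the part after the last "/" can carry a ":tag"; a colon before that
    # belongs to a registry host:port such as "localhost:5000".
    slash = name.rfind("/")
    last = name[slash + 1:]
    tag = None
    if ":" in last:
        last, tag = last.split(":", 1)
        name = name[: slash + 1] + last
    # The first path component is a registry only if it looks like a host name.
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = DEFAULT_REGISTRY, name
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = "library/" + repository  # official images live here
    if tag is None and not digest:
        tag = "latest"  # default when neither a tag nor a digest is given
    return registry, repository, tag, digest or None
```

With these rules, `hello-world` expands to `docker.io/library/hello-world:latest`. A name such as `localhost:5000/node-test:v1` stays on the local registry.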

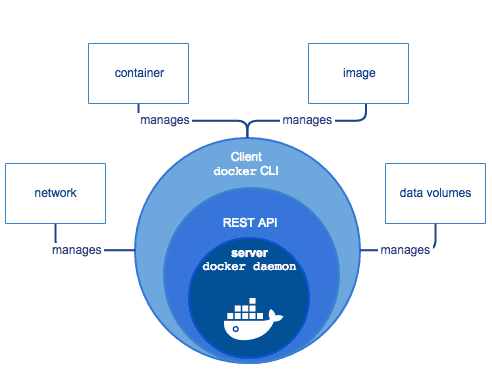

Docker Engine

- Docker Engine is the core of Docker: the underlying client-server technology that creates and runs containers. It consists of a long-running daemon (dockerd), the APIs that programs use to talk to it, and the docker command-line client. Generally speaking, when someone says "Docker" and isn't talking about the company or the overall project, they mean Docker Engine.
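Because the Engine is client-server, the docker CLI is just one client of the daemon's HTTP API. On Linux the daemon listens by default on the Unix socket /var/run/docker.sock, and a GET request to /version returns JSON describing the server. The sketch below calls that endpoint using only the Python standard library. It assumes a Unix-like host and read access to the socket, which typically means running as root or as a member of the docker group. The class and function names are hypothetical.

```python
from __future__ import annotations

import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        try:
            sock.settimeout(self.timeout)
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def engine_version(socket_path: str = "/var/run/docker.sock") -> dict | None:
    """Ask the Docker daemon for its version info; return None if unreachable."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")  # connect() is called automatically
        response = conn.getresponse()
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, http.client.HTTPException, ValueError):
        return None
    finally:
        conn.close()
```

With a daemon running, engine_version() returns a dict whose "Version" field should match the server version that docker version reports.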

Here are some commonly used commands:

- docker build — builds an image from a Dockerfile

- docker images — displays all Docker images on that machine

- docker run — creates and starts a container from an image and runs a command in it. Many options go along with docker run, including:

  - -p — publishes a container port on the host (host_port:container_port)

  - -it — keeps STDIN open and allocates a pseudo-terminal, giving you an interactive session inside the container

  - -v — bind mounts a host directory (or attaches a volume) to the container

  - -e — sets environment variables

  - -d — starts the container in detached mode (it runs as a background process)

- docker rmi — removes one or more images

- docker rm — removes one or more containers

- docker kill — kills one or more running containers

- docker ps — displays a list of running containers

- docker tag — tags the image with an alias that can be referenced later (good for versioning)

- docker login — logs in to a Docker registry (Docker Hub unless you name another)

These commands can be combined in countless ways, but here are a couple of simple examples.

```console
$ docker build -t node-test .
```

This tells Docker to build an image from the Dockerfile in the current directory (.) and tag it (-t) as node-test. Don't forget the period. It sets the build context, which is the directory whose files are sent to the build, and by default Docker looks for a file named Dockerfile there.

```console
$ docker run -p 80:80 -d node-test
```

This tells Docker to run the image that was built and tagged as node-test. The -p 80:80 option publishes port 80 on the host machine and forwards it to port 80 inside the container. The -d option runs the container in the background in detached mode.
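A script or test harness that starts containers can assemble the docker run arguments as a list rather than one shell string, which avoids quoting problems. The hypothetical helper below maps the options described earlier (-p, -e, -v, -d) onto such a list. It only builds the command. Running it is left to subprocess and requires the Docker CLI.

```python
from __future__ import annotations


def build_run_args(image: str,
                   ports: dict[int, int] | None = None,
                   env: dict[str, str] | None = None,
                   volumes: dict[str, str] | None = None,
                   detach: bool = False) -> list[str]:
    """Build a docker run argument list.

    ports maps host port -> container port (-p HOST:CONTAINER), env maps
    variable name -> value (-e), volumes maps host path -> container path (-v).
    """
    args = ["docker", "run"]
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]
    for name, value in (env or {}).items():
        args += ["-e", f"{name}={value}"]
    for host_path, container_path in (volumes or {}).items():
        args += ["-v", f"{host_path}:{container_path}"]
    if detach:
        args.append("-d")
    args.append(image)  # docker run options must come before the image name
    return args
```

For example, build_run_args("node-test", ports={80: 80}, detach=True) produces the same command as the example above. Passing that list to subprocess.run(cmd, check=True) would start the container. Using a dict for ports is a simplification that allows only one mapping per host port.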

Docker Compose, Docker Swarm, and Kubernetes

Docker also makes it easier to coordinate behaviors between containers, and thus build application stacks by connecting containers together.

Docker Compose was created by Docker to simplify developing and testing multi-container applications. It is a command-line tool, today usually invoked as docker compose. It reads a YAML file (typically compose.yaml) that describes the services making up an application, assembles the application from multiple containers, and runs them in concert on a single host.
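A Compose file can declare that one service depends_on others, and Compose then starts services in dependency order. The sketch below models only that ordering step for the web server, database, and cache trio mentioned earlier, using Python's graphlib module (Python 3.9+). The service names are hypothetical, and the code illustrates the idea rather than how Compose is implemented.

```python
from __future__ import annotations

from graphlib import CycleError, TopologicalSorter

# Hypothetical services: each key lists the services it depends_on.
services = {
    "web": {"db", "cache"},
    "db": set(),
    "cache": set(),
}


def start_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every service starts after its dependencies.

    Raises graphlib.CycleError if the dependencies form a cycle.
    """
    return list(TopologicalSorter(deps).static_order())


print(start_order(services))  # web comes last; db and cache may appear in either order
```

A dependency cycle has no valid start order, so start_order raises CycleError instead of guessing.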

More advanced versions of these behaviors, known as container orchestration, are offered by other products such as Docker Swarm and Kubernetes, but Docker provides the basics. Even though Swarm grew out of the Docker project, Kubernetes has become the de facto container orchestration platform.

Ending Note

You can use Docker containers as a core building block for creating modern applications and platforms. Docker makes it easier to build and run distributed microservices architectures, deploy your code through standardized continuous integration and delivery pipelines, build highly scalable data processing systems, and create fully managed platforms for your developers.

Docker simplifies and accelerates your workflow, while giving developers the freedom to innovate with their choice of tools, application stacks, and deployment environments for each project.

For more, refer to the well-written Docker documentation at https://docs.docker.com/get-started/ and the sample Dockerfiles at https://github.com/sanmak/dockerfile-samples.